Location data is extremely sensitive: it reveals where we spend our time and for how long, what we like to do in our free time, and how we spend our money. Our new study, titled “Where you go is who you are – A study on machine learning based semantic privacy attacks”, sets out to answer a critical question: Just how much “semantic information” (that is, detailed and potentially sensitive insight into personal behavior) can be extracted from someone’s location data using machine learning techniques, especially when this data might be inaccurate or incomplete?

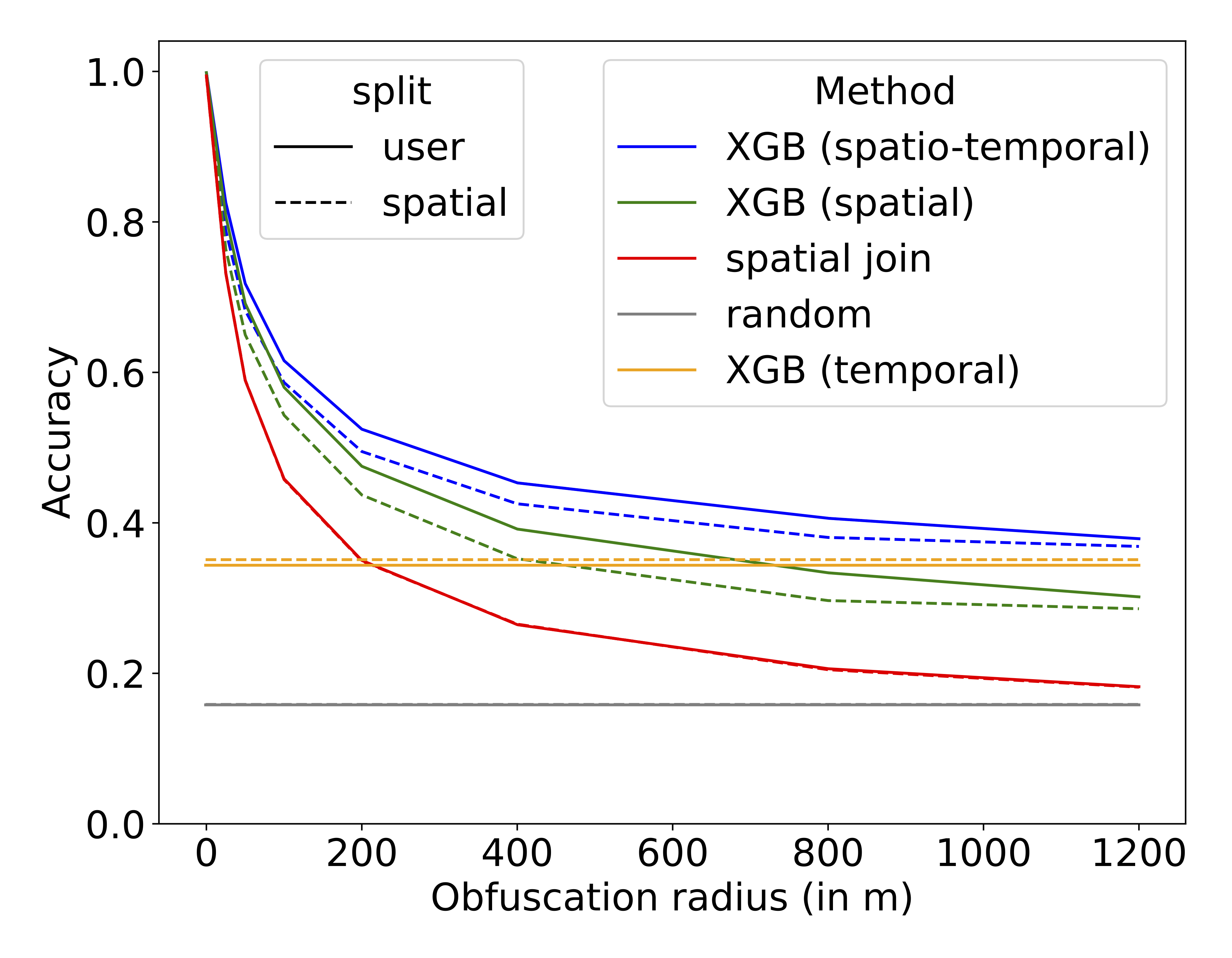

To address this question, we conducted a study using a dataset of location visits from a social network, Foursquare, where the category of each location (e.g., “dining” / “sports” / “nightlife”) is known. We simulate the following scenario: An attacker knows only the geographic coordinates and time of a location visit, and trains a machine learning model to infer the place category. Crucially, the geographic coordinates can be imprecise due to GPS inaccuracies or protection measures. We simulate this by obfuscating the true geographic coordinates within a varying radius.
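To make the obfuscation step concrete, here is a minimal sketch of one way to perturb a coordinate within a given radius. It assumes the noisy point is drawn uniformly from a disc around the true location and uses a local flat-earth approximation; the function names `obfuscate` and `haversine_m` are illustrative, and the exact scheme used in the paper may differ.

```python
import math
import random

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def obfuscate(lat, lon, radius_m, rng=random):
    """Return a point drawn uniformly from a disc of radius_m around (lat, lon).

    Assumption: uniform-in-disc noise; other obfuscation schemes are possible.
    """
    # Taking the square root makes the sample uniform over the disc's area
    # instead of clustering near the center.
    distance = radius_m * math.sqrt(rng.random())
    bearing = rng.uniform(0.0, 2.0 * math.pi)
    # Local flat-earth approximation, adequate for offsets of a few hundred meters.
    dlat = distance * math.cos(bearing) / EARTH_RADIUS_M
    dlon = distance * math.sin(bearing) / (EARTH_RADIUS_M * math.cos(math.radians(lat)))
    return lat + math.degrees(dlat), lon + math.degrees(dlon)


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


if __name__ == "__main__":
    rng = random.Random(42)          # fixed seed for reproducibility
    true_lat, true_lon = 40.7580, -73.9855  # hypothetical visit location
    for radius in (0, 50, 100, 200):
        noisy = obfuscate(true_lat, true_lon, radius, rng)
        shift = haversine_m(true_lat, true_lon, *noisy)
        print(f"radius={radius:>3} m -> displaced by {shift:.1f} m")
```

In the attack scenario, the model would then be trained and evaluated on the perturbed coordinates (plus the visit time) rather than the true ones, once for each obfuscation radius.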

Our experiments reveal a significant privacy risk even with imprecise location data. Although the attacker’s accuracy decreases exponentially with the obfuscation radius, the privacy loss remains high even if the coordinates are perturbed by up to 200m. Some risk remains from the temporal information alone. These findings highlight the privacy risks associated with the growing databases of tracking and spatial context data, and call on policy-makers to adopt stricter regulations.

Check out our paper and source code!