Our new paper, “Incorporating multimodal context information into traffic speed forecasting through graph deep learning”, is now online in the International Journal of Geographical Information Science (IJGIS).

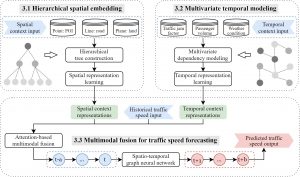

In this work, we propose a multimodal context-based graph convolutional neural network (MCGCN) that fuses spatial and temporal context data into traffic speed prediction. The model comprises three modules: (a) hierarchical spatial embedding, which learns spatial representations by organizing spatial contexts from different dimensions; (b) multivariate temporal modeling, which learns temporal representations by capturing dependencies among multivariate temporal contexts; and (c) attention-based multimodal fusion, which integrates traffic speed with the spatial and temporal context representations for multi-step speed prediction. We evaluate the model through extensive experiments in Singapore. Compared with the baseline model (STGCN), MCGCN improves the mean absolute error by 0.29 km/h, 0.45 km/h and 0.89 km/h for 30-min, 60-min and 120-min speed prediction, respectively, which demonstrates the importance of multimodal contexts. We also explore how different contexts affect traffic speed forecasting, giving stakeholders a reference for understanding the relationship between context information and transportation systems. Check out the open-access paper online!
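For readers unfamiliar with attention-based fusion, here is a minimal, generic sketch of the idea in plain Python. It is not the implementation from the paper: the dot-product scoring, the `query` vector, the function names and all numbers are assumptions chosen purely for illustration. It only shows how several modality representations (speed, spatial context, temporal context) can be combined into one vector with softmax-normalised weights.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_fuse(representations, query):
    """Fuse same-length modality vectors into a single vector.

    Each modality is scored by its dot product with `query` (a stand-in
    for learned parameters; hypothetical, not taken from the paper).
    Scores are normalised with softmax, and the output is the
    attention-weighted sum of the modality vectors.
    """
    scores = [sum(q * x for q, x in zip(query, rep)) for rep in representations]
    weights = softmax(scores)
    dim = len(query)
    fused = [
        sum(w * rep[i] for w, rep in zip(weights, representations))
        for i in range(dim)
    ]
    return fused, weights


# Toy representations for one road segment; values are made up.
speed_repr = [0.9, 0.1, 0.3]
spatial_repr = [0.2, 0.8, 0.5]
temporal_repr = [0.4, 0.4, 0.7]
query = [1.0, 0.5, -0.5]  # hypothetical learned query vector

fused, weights = attention_fuse([speed_repr, spatial_repr, temporal_repr], query)
print("attention weights:", [round(w, 3) for w in weights])
print("fused representation:", [round(v, 3) for v in fused])
```

In a trained model the query (or a small scoring network) would be learned jointly with the rest of the network, so the weights reflect how informative each context is for the prediction at hand.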